Like many others, I like to make music. One of the ways I find inspiration is by analyzing my favorite pieces of music - melodically, harmonically, texturally - and trying to glean insights that I can apply to my own work. A major part of that analysis is harmony, often specifically chord progressions. As part of my analysis, I transcribed the chords for every track of one of my favorite soundtracks, Ori and the Will of the Wisps. While I learned a lot from looking at this data myself, I wondered whether a computer could learn from it too, and maybe generate chord progressions in the style of its training data. This is what Cadence does.

But for me, making Cadence wasn't just about plugging data into a model and getting some output - I had already done that with a Markov model in a much simpler script. It was about creating a polished interface for this functionality that other musicians would actually use. Cadence allows musicians to curate and share training data, create and train models, and be inspired by ML suggestions, all through an interface that I consider some of my best work.

Check out the Cadence demo or source code.

Cadence uses SolidJS, which is like React but better (aside from the smaller ecosystem). Code is written in TypeScript and CSS, and built with Vite and PostCSS. The TensorFlow.js library is used for ML models.

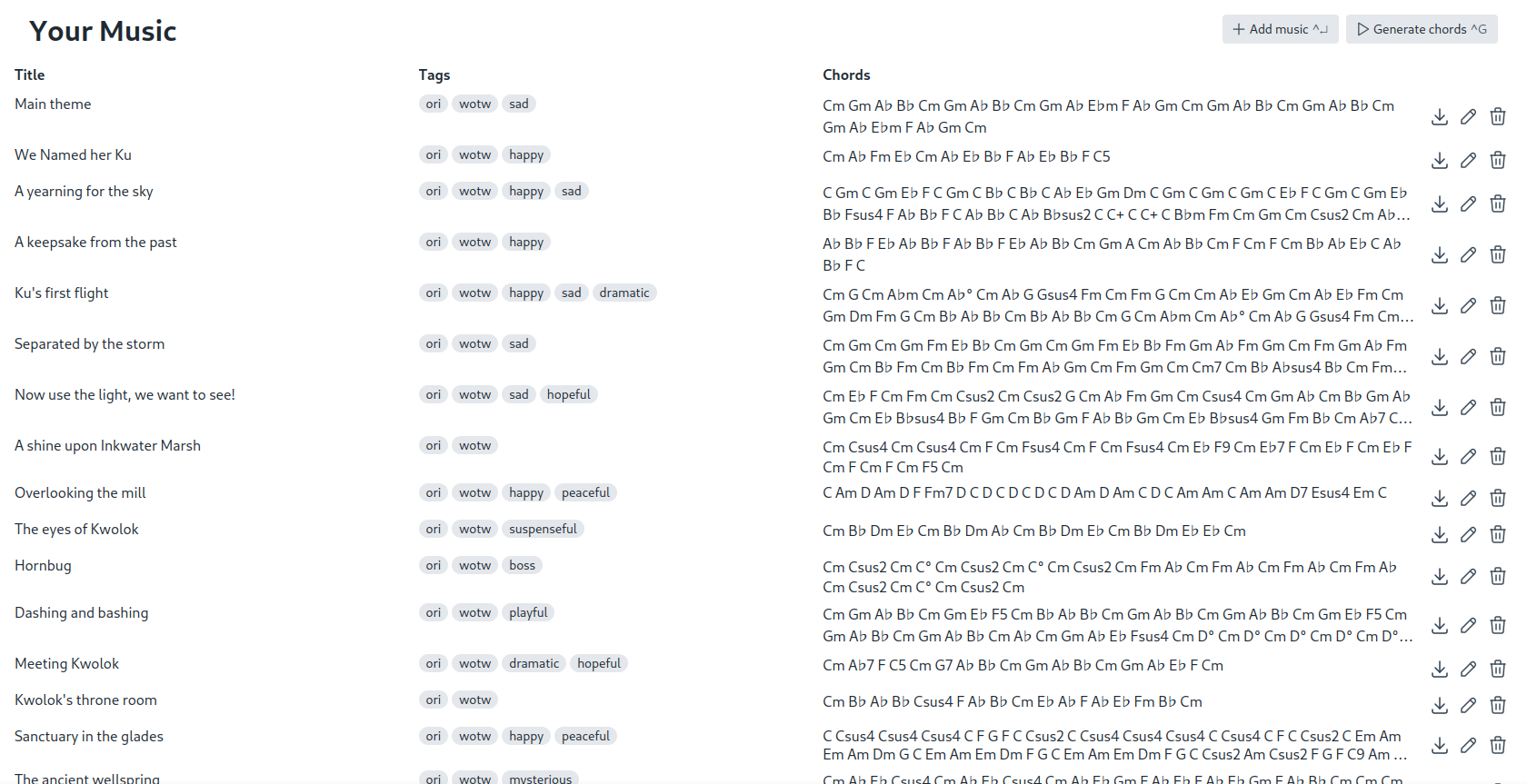

The main interface shows the list of pieces of music used as training data. Users can save and load these as JSON files, if they want to share them with each other. Files can be dragged and dropped onto the interface. Pressing Ctrl-Enter will activate the dialog form to add a new piece of music, and pressing Ctrl-G allows users to train a new model, or to use one they've trained before.

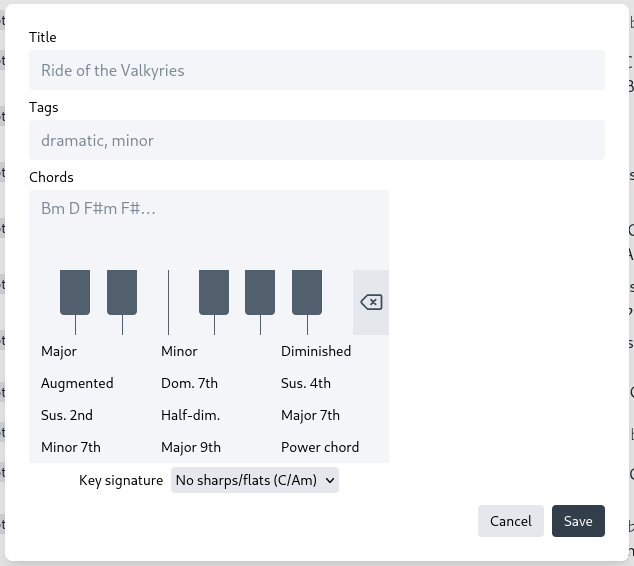

This UI is shown when adding or editing a piece of music from the training data collection. The user is asked to input a title, any tags to be used for fine-grained selection of training data later, and a sequence of chords. Most of it is very simple, but what I'd like to highlight here is the chord progression input.

When typing, it makes a number of automatic corrections from what's easy to type to what is musically conventional. For example, it corrects lowercase "b" to "♭", "#" to "♯", "halfdim" or "m7b5" to "ø", and "dim" to "°". It also checks input validity and places red squiggly lines under invalid chords. When copying or pasting, the editor makes these same corrections and checks, and also ensures that there are no extra or missing spaces between chords.

For mobile users, the input method is different. Cadence disables the default UI keyboard for the textarea, and instead allows mobile users to input chords using the interface below the textarea. Clicking a note on the piano keyboard adds a new chord where the cursor is, with its default quality being dependent on the selected key signature. (The key signature selector only exists for this reason and does not affect the training data.) For example, if the user has selected a key signature with no sharps or flats, then if they click C it will be a major chord, D will be minor, E will be minor, F will be major, etc. The backspace button deletes one chord at a time, unless the user has selected a range of chords to delete. Chord qualities can be changed from the default by selecting one in the grid below.
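To make the two behaviors above concrete, here is a minimal sketch of how the typed-input corrections and the key-dependent default quality could work. It is not Cadence's actual code: the names `REPLACEMENTS`, `normalizeChord`, `normalizeProgression`, `DIATONIC_QUALITY`, and `defaultQuality` are all hypothetical, the key is assumed to be major, and notes outside the key are assumed to default to major.

```typescript
// Hypothetical replacement table. Order matters: "halfdim" and "m7b5" must be
// rewritten before the generic "dim" and "b" rules can touch them.
const REPLACEMENTS: Array<[RegExp, string]> = [
  [/halfdim|m7b5/g, "ø"],
  [/dim/g, "°"],
  [/#/g, "♯"],
  [/b/g, "♭"],
];

// Rewrite one typed chord into conventional notation.
function normalizeChord(raw: string): string {
  return REPLACEMENTS.reduce(
    (chord, [pattern, symbol]) => chord.replace(pattern, symbol),
    raw,
  );
}

// Normalize every chord and collapse runs of whitespace to single spaces.
function normalizeProgression(text: string): string {
  return text
    .split(/\s+/)
    .filter((token) => token.length > 0)
    .map((token) => normalizeChord(token))
    .join(" ");
}

// Assumed quality suffixes for the diatonic triads of a major key,
// keyed by semitones above the tonic ("" means major).
const DIATONIC_QUALITY: Record<number, string> = {
  0: "", 2: "m", 4: "m", 5: "", 7: "", 9: "m", 11: "°",
};

// Default quality suffix for a tapped note, given pitch classes 0-11 (C = 0).
// Notes outside the key fall back to major (an assumption).
function defaultQuality(rootPitchClass: number, tonicPitchClass: number): string {
  const degree = (((rootPitchClass - tonicPitchClass) % 12) + 12) % 12;
  return DIATONIC_QUALITY[degree] ?? "";
}
```

With no sharps or flats (tonic C, pitch class 0), `defaultQuality(2, 0)` gives the minor suffix for D, matching the example above. A real editor would also need to track the cursor position while rewriting text, which this sketch leaves out.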

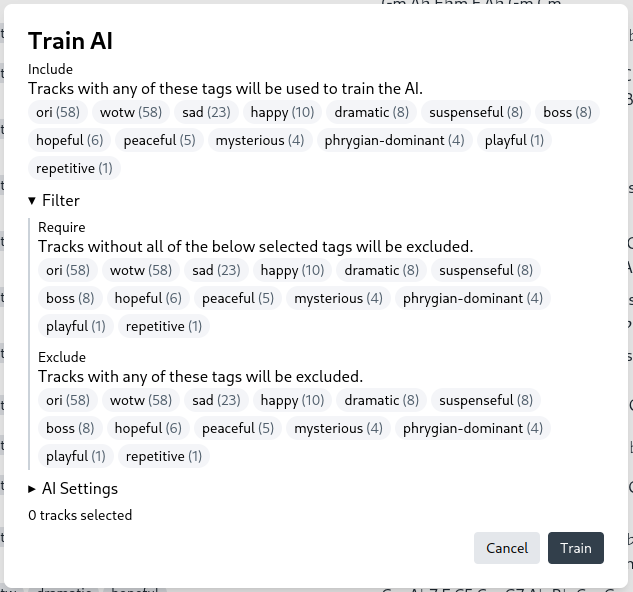

First the user is given options to select their training data based on tags on individual tracks. This helps them train a model that is useful to the style they are writing in.
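As a rough illustration of tag-based selection, the sketch below filters a collection down to pieces that carry at least one chosen tag. The `Piece` shape and the `selectByTags` function are hypothetical, and the "match any tag, empty selection keeps everything" semantics are assumptions rather than a description of what Cadence does.

```typescript
// Hypothetical shape of one entry in the training data collection.
interface Piece {
  title: string;
  tags: string[];
  chords: string[];
}

// Keep pieces that carry at least one of the selected tags.
// An empty selection keeps the whole collection (assumption).
function selectByTags(pieces: Piece[], selected: string[]): Piece[] {
  if (selected.length === 0) return pieces;
  const wanted = new Set(selected);
  return pieces.filter((piece) => piece.tags.some((tag) => wanted.has(tag)));
}
```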

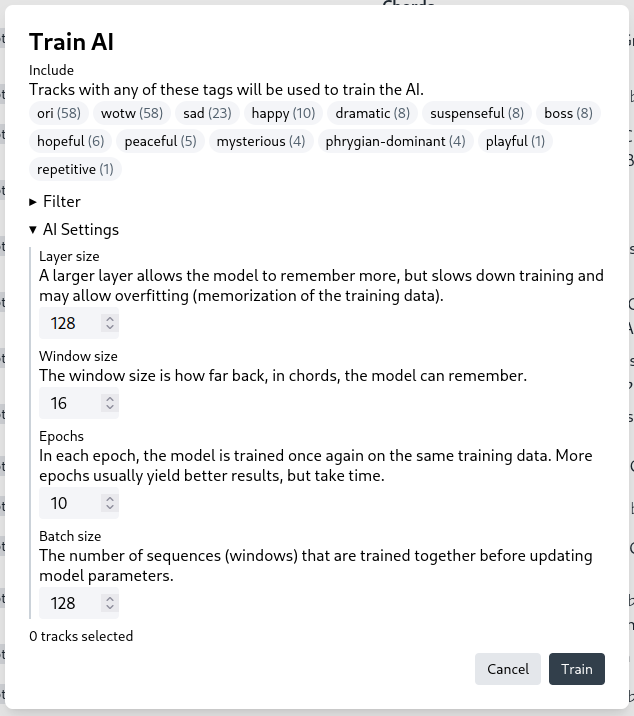

The user can also customize the training process in the "AI settings" section. They can choose the number of epochs to train for, the learning rate, the batch size, and the window size. The window size is the number of chords that the model will use to predict the next chord. A larger window size may help the model learn more complex patterns, but it may also make training slower and require more data.
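To show what the window size means in practice, here is a small sketch that slices one chord progression into training examples, each pairing a window of chords with the chord that follows it. The `TrainingExample` type and `makeWindows` function are hypothetical names, and how Cadence handles progressions shorter than the window is not covered by this sketch (here they simply yield no examples).

```typescript
// One training example: a window of chords and the chord that follows it.
interface TrainingExample {
  input: string[];
  target: string;
}

// Slide a fixed-size window over the progression, one chord at a time.
function makeWindows(chords: string[], windowSize: number): TrainingExample[] {
  const examples: TrainingExample[] = [];
  for (let start = 0; start + windowSize < chords.length; start++) {
    examples.push({
      input: chords.slice(start, start + windowSize),
      target: chords[start + windowSize],
    });
  }
  return examples;
}
```

A progression of n chords yields n minus the window size examples, which is one reason a larger window needs more data: every extra chord in the window removes one example per piece.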

After clicking "Train AI", the user is shown a progress bar. When training is complete, the user is shown the next dialog which allows them to use and save the model.

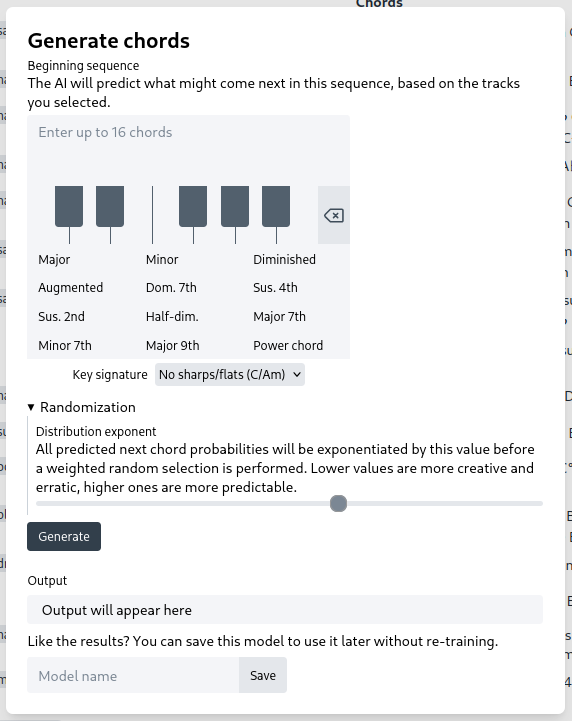

Next, the user may interact with the newly trained model. The model will autocomplete a chord progression from the beginning sequence entered, which is allowed to be empty. They can also adjust the randomization exponent of the model to control whether it produces output that is predictable and true to the training data or more erratic and creative. Finally, they can save the model if desired.
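One common way to implement a control like this is to raise each predicted probability to a power, renormalize, and then sample. The sketch below assumes that approach; `reweight` and `sampleIndex` are hypothetical names, and the direction of the exponent (larger means more predictable) is an assumption that Cadence may define differently.

```typescript
// Raise each probability to the exponent and renormalize.
// Exponent > 1 sharpens the distribution (more predictable);
// exponent < 1 flattens it (more erratic). Direction is an assumption.
function reweight(probabilities: number[], exponent: number): number[] {
  const weights = probabilities.map((p) => Math.pow(p, exponent));
  const total = weights.reduce((sum, w) => sum + w, 0);
  return weights.map((w) => w / total);
}

// Draw an index from a probability distribution.
// The random source is injectable so the function can be tested.
function sampleIndex(probabilities: number[], random: () => number = Math.random): number {
  const threshold = random();
  let cumulative = 0;
  for (let i = 0; i < probabilities.length; i++) {
    cumulative += probabilities[i];
    if (threshold < cumulative) return i;
  }
  return probabilities.length - 1; // guard against floating-point rounding
}
```

An exponent of 1 leaves the model's predictions unchanged, while an exponent of 0 ignores them entirely and picks uniformly.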

The most difficult part of this whole project was probably the chord progression editor. No other UI element like this exists to my knowledge, so I was on my own in implementation. I had to figure out how to make it maximally intuitive for desktop users who type everything in with their keyboard while still enforcing a style that is machine-parseable, and also as easy as possible for mobile users for whom typing with their default keyboard would be prohibitively slow. The chord progression editor needs to do a lot in the way of continuously validating, formatting, and standardizing user input, all while supporting two entirely different input methods.

Additionally, I had never worked with TensorFlow.js before. At the time of creating this, I wasn't too familiar with ML in general either. One major decision was how to represent chords as vectors; I chose one-hot encoding for the chord root and quality. For the training process, I realized that chord progressions are invariant with respect to key, so I multiplied the amount of training data by 12, with each copy transposed up or down by a different number of semitones.
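The following sketch illustrates both ideas under stated assumptions: a chord is a root pitch class (0 to 11) plus a quality name, the one-hot vector is the root's one-hot concatenated with the quality's one-hot, and augmentation transposes each progression by 0 through 11 semitones. The `Chord` type, the `QUALITIES` list, and the function names are hypothetical; Cadence's real quality set and vector layout may differ.

```typescript
interface Chord {
  root: number; // pitch class, 0 = C ... 11 = B
  quality: string;
}

// Hypothetical quality vocabulary; the real list is likely longer.
const QUALITIES = ["maj", "min", "dim", "halfdim"];

// Shift every root by the given number of semitones, wrapping at the octave.
function transpose(progression: Chord[], semitones: number): Chord[] {
  return progression.map((chord) => ({
    root: (((chord.root + semitones) % 12) + 12) % 12,
    quality: chord.quality,
  }));
}

// Produce 12 copies of a progression, one per transposition (0 = original).
function augment(progression: Chord[]): Chord[][] {
  return Array.from({ length: 12 }, (_, semitones) => transpose(progression, semitones));
}

// Concatenate a 12-slot one-hot for the root with a one-hot for the quality.
function encode(chord: Chord): number[] {
  const qualityIndex = QUALITIES.indexOf(chord.quality);
  if (qualityIndex < 0) throw new Error(`Unknown quality: ${chord.quality}`);
  const vector = new Array<number>(12 + QUALITIES.length).fill(0);
  vector[chord.root] = 1;
  vector[12 + qualityIndex] = 1;
  return vector;
}
```

Because only the root moves during transposition, the quality half of each encoded vector is identical across all 12 copies.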